Find Out 46+ Facts About Unnest_Tokens Function In R They Did not Let You in!

Unnest_Tokens Function In R | Emily dickinson wrote some lovely text in her time. Those who want more than 18,000 to pull out the hashtags from the text of each tweet we first need to convert the text into a one word per row format using the unnest_tokens() function. A programmer builds a function to avoid repeating the same task, or reduce complexity. This function takes our input tibble called animal_farm, and extracts tokens from the column specified by the input argument. 3.1.1 term frequency in jane austen's novels.

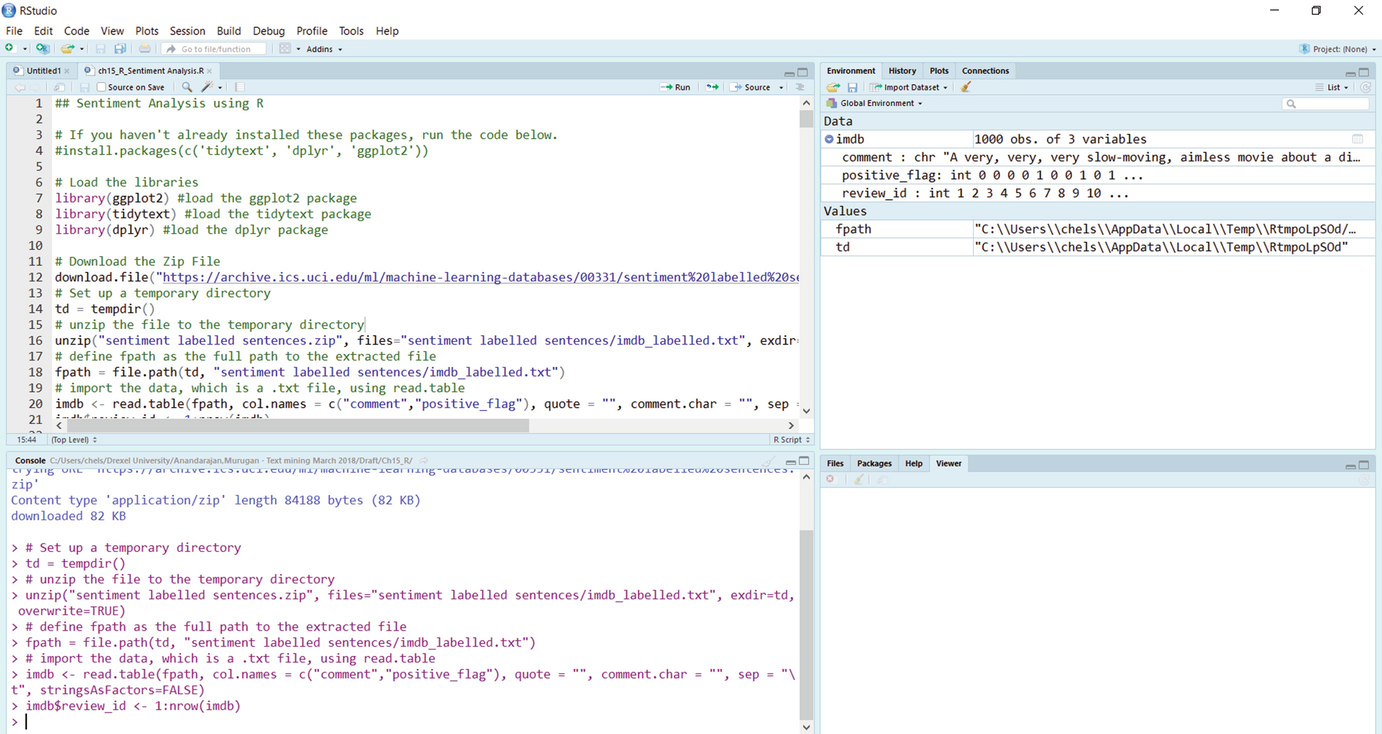

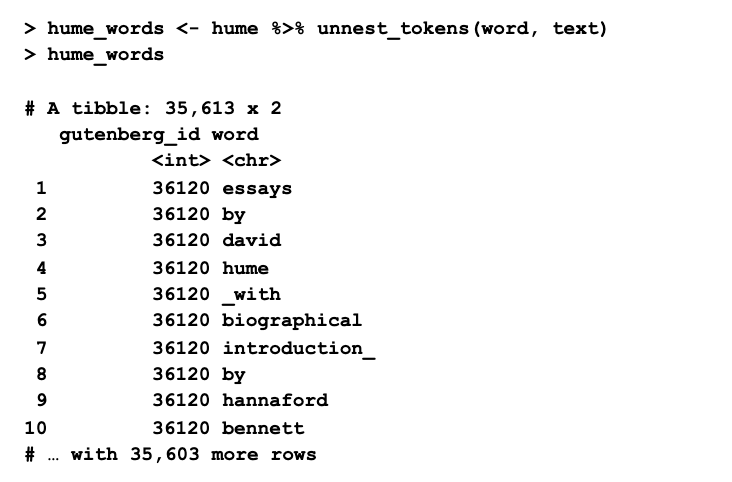

After using unnest_tokens, we've split each row so that there is one token (word) in each row of the new data frame. 3.1.3 word rank slope chart. Unnest_tokens_ is the standard evaluation version. Could not find function unnest_tokens. 3.1.1 term frequency in jane austen's novels.

R matches your input parameters with its function arguments, either by value or by position, then. The from term is recursive, which enables you to chain the unnest clause with any of the terms which are permitted in the from clause, including other unnest clauses. You have no option to configure that currently. From tidytext v0.1.3 by julia silge. The tidytext function for tokenization is called unnest_tokens. And i might be asking too much, but if all the standard commands in r worked with. In the help page it is precised: Those who want more than 18,000 to pull out the hashtags from the text of each tweet we first need to convert the text into a one word per row format using the unnest_tokens() function. These functions are wrappers around unnest_tokens( token = sentences ) unnest_tokens( token = lines ) and unnest_tokens( token = paragraphs ). I would like to know how to reverse the unnest_token function in order to export the tweets and work in python. Unnest_tokens function | r documentation token. I'm trying to split a column into tokens using the tokenizers package but i keep receiving an error: After applying the unnest_tokens() function in the previous code, the rows correspond to tokenized words.

And i might be asking too much, but if all the standard commands in r worked with. I would like to know how to reverse the unnest_token function in order to export the tweets and work in python. The tidytext function for tokenization is called unnest_tokens. I have also quit and restarted r and. These functions will strip all punctuation and normalize all whitespace to a single space character.

Here we have used the default arguments which produce word tokens. The two basic arguments to unnest_tokens used here are column names. A programmer builds a function to avoid repeating the same task, or reduce complexity. I'm trying to split a column into tokens using the tokenizers package but i keep receiving an error: Unnest_tokens_ is the standard evaluation version. # remove punctuation, convert to lowercase, add id for each tweet! Unnesting flattens it back out into regular columns. Unnest_tokens with token = ngrams will use behind the scene the tokenizer and tokenize_ngrams function. I want to used the unnest_tokens function from tidytext in another function. The unnest_tokens() function from tidytext is very flexible. The dataset is not yet compatible with tidy tools (not compliant with tidy data principles). Unnest_tweets( tbl, output, input, strip_punct. R matches your input parameters with its function arguments, either by value or by position, then.

Here we have used the default arguments which produce word tokens. I have also quit and restarted r and. The from term is recursive, which enables you to chain the unnest clause with any of the terms which are permitted in the from clause, including other unnest clauses. Unnest_tokens expects all columns of input to be atomic vectors (not lists) how do i fix this without using the it would indeed be nice to hv a better documentation. These functions are wrappers around unnest_tokens( token = sentences ) unnest_tokens( token = lines ) and unnest_tokens( token = paragraphs ).

3.2 weighted log odds ratio. I am using r 3.5.3 and have installed and reinstalled dplyr, tidytext, tidyverse, tokenizers, tidyr, but still keep receiving the error. Unnest_tokens ( tbl , output , input , token = words , format = c ( text , man , latex , html. Error in unnest_tokens_.default(., word, reviewtext) : In the help page it is precised: This is useful in conjunction with other summaries that work with whole datasets, most. 3.1.3 word rank slope chart. 2 sentiment analysis with tidy data. R matches your input parameters with its function arguments, either by value or by position, then. Unnest_tokens_ is the standard evaluation version. The unnest_tokens() function from tidytext is very flexible. A function, in a programming environment, is a set of instructions. Unnesting flattens it back out into regular columns.

Therefore the number of rows corresponds to the total number unnest_tokens function. We also specify what kind of tokens we want, and what the output column should be labeled.

Unnest_Tokens Function In R: This is useful in conjunction with other summaries that work with whole datasets, most.

0 Response to "Find Out 46+ Facts About Unnest_Tokens Function In R They Did not Let You in!"

Post a Comment